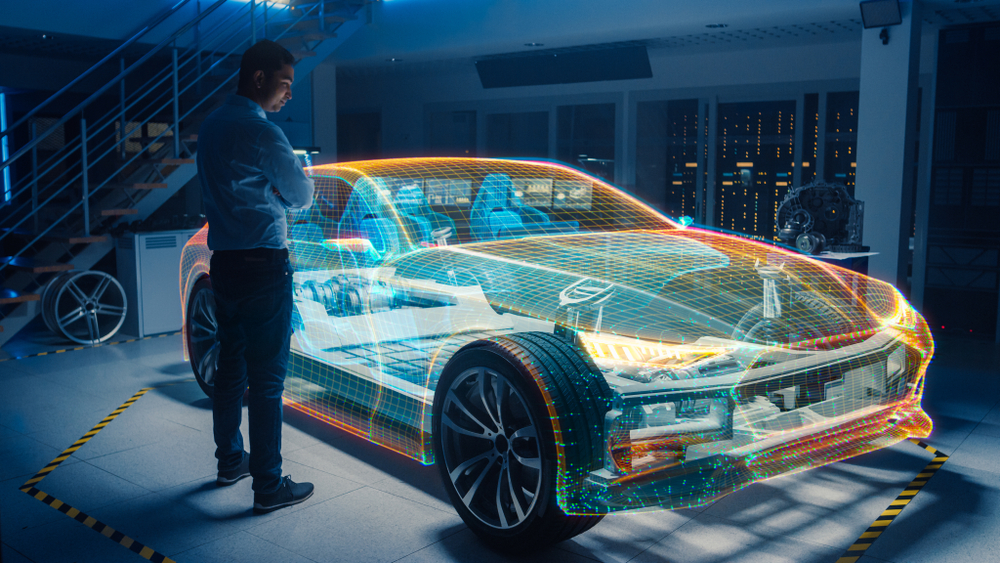

In the automotive industry, the pursuit of innovation is perpetual, driven by evolving technology, shifting consumer demands, and stringent regulatory standards. The challenges confronting automakers are manifold, and navigating the complexities of modern vehicle design and functionality calls for sophisticated solutions. Field-Programmable Gate Arrays (FPGAs) play a pivotal role in addressing some of the most pressing challenges the automotive sector faces.

Adapting to Change with Agility and Efficiency

At the core of today’s automotive landscape lies the need for connectivity. Modern vehicles have transcended their traditional roles, evolving into interconnected ecosystems of digital systems and functions. However, orchestrating seamless communication among these varied components presents a formidable challenge. FPGAs, known for their flexibility and adaptability, help ensure cohesive integration and interoperability across diverse automotive systems, from infotainment and navigation to telematics and vehicle-to-everything (V2X) communication.

Alongside connectivity, automotive systems must be robust and resilient enough to withstand the rigors of real-world driving. The conditions vehicular systems face are severe, encompassing extreme temperatures, mechanical vibration, and electromagnetic interference. Automotive-grade FPGAs are valued for their robustness and reliability, and are built to maintain performance under harsh environmental conditions. In safety-critical applications such as autonomous driving and advanced driver assistance systems (ADAS), that dependability is indispensable for safeguarding vehicle occupants and ensuring operational integrity.

Furthermore, the automotive industry is in constant change, marked by evolving consumer preferences, emerging technologies, and new regulatory requirements. To keep pace, automakers must respond swiftly to market shifts and technological advancements. Because FPGAs can be reconfigured and scaled rapidly, they empower automakers to iterate and innovate quickly while staying aligned with evolving market demands and regulatory mandates.

Moreover, energy efficiency and sustainability loom large in the automotive sector, calling for judicious management of power consumption and data processing. FPGAs combine high-performance computing capabilities with low power consumption. Whether optimizing power management systems or processing sensor data in real time, FPGAs bring performance and efficiency together, supporting the industry’s transition towards sustainable mobility solutions.

FPGAs: Shaping the Future of Mobility

FPGAs underpin the development of next-generation vehicles equipped to meet the demands of an increasingly interconnected and dynamic world. As the automotive industry continues to evolve, FPGAs will remain indispensable allies, driving advancements in connectivity, robustness, agility, and efficiency, and shaping the future of mobility for generations to come.

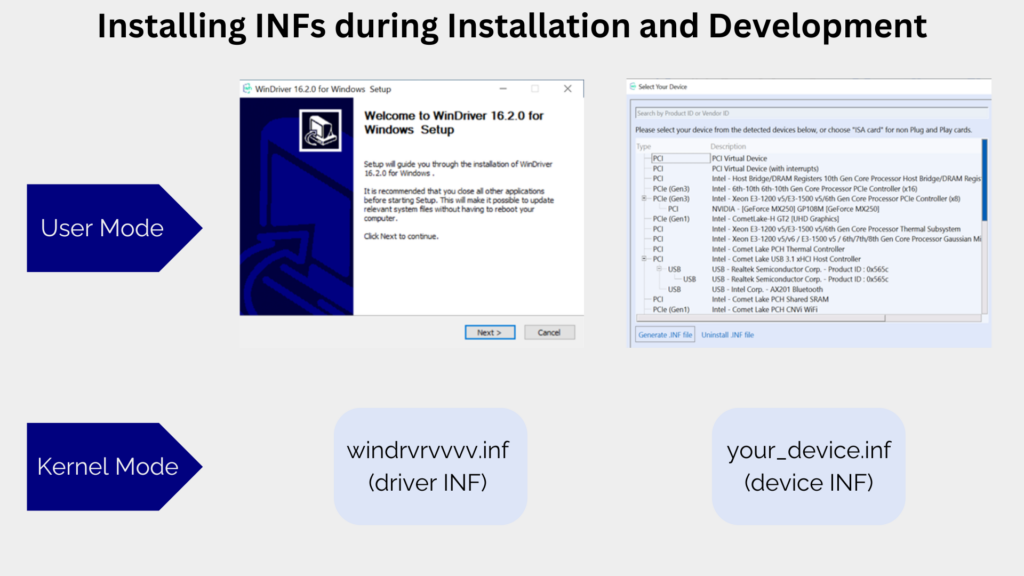

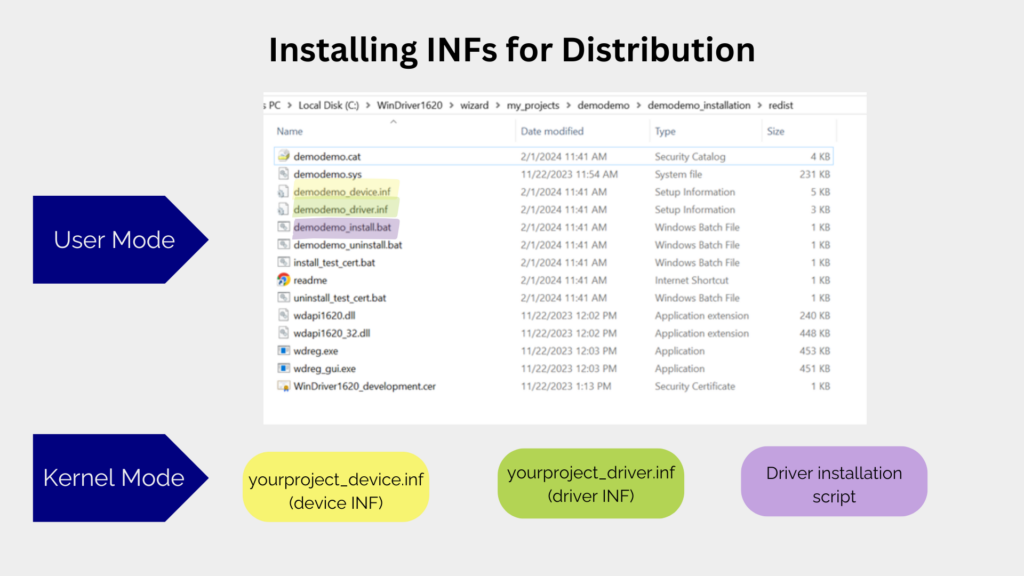

Effective driver development is crucial in the automotive industry because it directly affects the performance, reliability, and functionality of vehicle systems. Drivers serve as the interface between hardware devices, such as FPGAs, and the operating system, enabling seamless communication and control. A well-designed driver ensures optimal system operation, enhances safety, and facilitates the integration of new features and technologies. Additionally, efficient driver development streamlines the overall development process, reducing time-to-market and costs while enabling automakers to stay competitive in a rapidly evolving market.

Optimizing FPGAs: Why Driver Development Matters

Jungo’s WinDriver toolkit offers a comprehensive solution for driver development, making FPGA driver development more efficient and cost-effective. By providing a robust set of tools and resources, WinDriver simplifies the complexities of driver development, empowering developers to focus on innovation rather than mundane implementation details. WinDriver accelerates the development cycle with features including automatic code generation, debugging tools, and built-in support for industry-standard protocols, helping ensure compatibility and reliability across diverse automotive applications.

By leveraging Jungo’s WinDriver toolkit, automakers can streamline driver development, reduce development costs, and accelerate time-to-market for innovative automotive solutions, thereby gaining a competitive edge in the fast-paced automotive industry.

Ready to learn more about how FPGAs can revolutionize your automotive designs? Contact us today!